Preface

We will be using an open dataset from the popular site Kaggle. This European Soccer Database has more than 25,000 matches and more than 10,000 players for European professional soccer seasons from 2008 to 2016.

Dataset

We will be using an open dataset from the popular site Kaggle. This European Soccer Database has more than 25,000 matches and more than 10,000 players for European professional soccer seasons from 2008 to 2016.

Although we won’t be getting into the details of it for our example, the dataset even has attributes on weekly game updates, team line up, and detailed match events.

The goal of this notebook is to walk you through an end to end process of analyzing a dataset and introduce you to what we will be covering in this course. Our simple analytical process will include some steps for exploring and cleaning our dataset, some steps for predicting player performance using basic statistics, and some steps for grouping similar clusters using machine learning.

Getting Started

To get started, we will need to:

- Download the data from: https://www.kaggle.com/hugomathien/soccer

- Extract the zip file called "soccer.zip"

Import Libraries

We will start by importing the Python libraries we will be using in this analysis. These libraries include: sqllite3 for interacting with a local relational database pandas and numpy for data ingestion and manipulation matplotlib for data visualization specific methods from sklearn for Machine Learning and customplot, which contains custom functions we have written for this notebook

import sqlite3

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.cluster import KMeans

from sklearn.preprocessing import scale

from customplot import *

Ingest Data

Now, we will need to read the dataset using the commands below.

Note: Make sure you run the import cell above (shift+enter) before you run the data ingest code below.

df is a variable pointing to a pandas data frame.

# Create your connection.

cnx = sqlite3.connect('database.sqlite')

df = pd.read_sql_query("SELECT * FROM Player_Attributes", cnx)

Exploring Data

We will start our data exploration by generating simple statistics of the data. Let us look at what the data columns are using a pandas attribute called “columns”.

df.columns

Index(['id', 'player_fifa_api_id', 'player_api_id', 'date', 'overall_rating',

'potential', 'preferred_foot', 'attacking_work_rate',

'defensive_work_rate', 'crossing', 'finishing', 'heading_accuracy',

'short_passing', 'volleys', 'dribbling', 'curve', 'free_kick_accuracy',

'long_passing', 'ball_control', 'acceleration', 'sprint_speed',

'agility', 'reactions', 'balance', 'shot_power', 'jumping', 'stamina',

'strength', 'long_shots', 'aggression', 'interceptions', 'positioning',

'vision', 'penalties', 'marking', 'standing_tackle', 'sliding_tackle',

'gk_diving', 'gk_handling', 'gk_kicking', 'gk_positioning',

'gk_reflexes'],

dtype='object')

Next will display simple statistics of our dataset. You need to run each cell to make sure you see the outputs.

df.describe().transpose()

| count | mean | std | min | 25% | 50% | 75% | max | |

|---|---|---|---|---|---|---|---|---|

| id | 183978.0 | 91989.500000 | 53110.018250 | 1.0 | 45995.25 | 91989.5 | 137983.75 | 183978.0 |

| player_fifa_api_id | 183978.0 | 165671.524291 | 53851.094769 | 2.0 | 155798.00 | 183488.0 | 199848.00 | 234141.0 |

| player_api_id | 183978.0 | 135900.617324 | 136927.840510 | 2625.0 | 34763.00 | 77741.0 | 191080.00 | 750584.0 |

| overall_rating | 183142.0 | 68.600015 | 7.041139 | 33.0 | 64.00 | 69.0 | 73.00 | 94.0 |

| potential | 183142.0 | 73.460353 | 6.592271 | 39.0 | 69.00 | 74.0 | 78.00 | 97.0 |

| crossing | 183142.0 | 55.086883 | 17.242135 | 1.0 | 45.00 | 59.0 | 68.00 | 95.0 |

| finishing | 183142.0 | 49.921078 | 19.038705 | 1.0 | 34.00 | 53.0 | 65.00 | 97.0 |

| heading_accuracy | 183142.0 | 57.266023 | 16.488905 | 1.0 | 49.00 | 60.0 | 68.00 | 98.0 |

| short_passing | 183142.0 | 62.429672 | 14.194068 | 3.0 | 57.00 | 65.0 | 72.00 | 97.0 |

| volleys | 181265.0 | 49.468436 | 18.256618 | 1.0 | 35.00 | 52.0 | 64.00 | 93.0 |

| dribbling | 183142.0 | 59.175154 | 17.744688 | 1.0 | 52.00 | 64.0 | 72.00 | 97.0 |

| curve | 181265.0 | 52.965675 | 18.255788 | 2.0 | 41.00 | 56.0 | 67.00 | 94.0 |

| free_kick_accuracy | 183142.0 | 49.380950 | 17.831746 | 1.0 | 36.00 | 50.0 | 63.00 | 97.0 |

| long_passing | 183142.0 | 57.069880 | 14.394464 | 3.0 | 49.00 | 59.0 | 67.00 | 97.0 |

| ball_control | 183142.0 | 63.388879 | 15.196671 | 5.0 | 58.00 | 67.0 | 73.00 | 97.0 |

| acceleration | 183142.0 | 67.659357 | 12.983326 | 10.0 | 61.00 | 69.0 | 77.00 | 97.0 |

| sprint_speed | 183142.0 | 68.051244 | 12.569721 | 12.0 | 62.00 | 69.0 | 77.00 | 97.0 |

| agility | 181265.0 | 65.970910 | 12.954585 | 11.0 | 58.00 | 68.0 | 75.00 | 96.0 |

| reactions | 183142.0 | 66.103706 | 9.155408 | 17.0 | 61.00 | 67.0 | 72.00 | 96.0 |

| balance | 181265.0 | 65.189496 | 13.063188 | 12.0 | 58.00 | 67.0 | 74.00 | 96.0 |

| shot_power | 183142.0 | 61.808427 | 16.135143 | 2.0 | 54.00 | 65.0 | 73.00 | 97.0 |

| jumping | 181265.0 | 66.969045 | 11.006734 | 14.0 | 60.00 | 68.0 | 74.00 | 96.0 |

| stamina | 183142.0 | 67.038544 | 13.165262 | 10.0 | 61.00 | 69.0 | 76.00 | 96.0 |

| strength | 183142.0 | 67.424529 | 12.072280 | 10.0 | 60.00 | 69.0 | 76.00 | 96.0 |

| long_shots | 183142.0 | 53.339431 | 18.367025 | 1.0 | 41.00 | 58.0 | 67.00 | 96.0 |

| aggression | 183142.0 | 60.948046 | 16.089521 | 6.0 | 51.00 | 64.0 | 73.00 | 97.0 |

| interceptions | 183142.0 | 52.009271 | 19.450133 | 1.0 | 34.00 | 57.0 | 68.00 | 96.0 |

| positioning | 183142.0 | 55.786504 | 18.448292 | 2.0 | 45.00 | 60.0 | 69.00 | 96.0 |

| vision | 181265.0 | 57.873550 | 15.144086 | 1.0 | 49.00 | 60.0 | 69.00 | 97.0 |

| penalties | 183142.0 | 55.003986 | 15.546519 | 2.0 | 45.00 | 57.0 | 67.00 | 96.0 |

| marking | 183142.0 | 46.772242 | 21.227667 | 1.0 | 25.00 | 50.0 | 66.00 | 96.0 |

| standing_tackle | 183142.0 | 50.351257 | 21.483706 | 1.0 | 29.00 | 56.0 | 69.00 | 95.0 |

| sliding_tackle | 181265.0 | 48.001462 | 21.598778 | 2.0 | 25.00 | 53.0 | 67.00 | 95.0 |

| gk_diving | 183142.0 | 14.704393 | 16.865467 | 1.0 | 7.00 | 10.0 | 13.00 | 94.0 |

| gk_handling | 183142.0 | 16.063612 | 15.867382 | 1.0 | 8.00 | 11.0 | 15.00 | 93.0 |

| gk_kicking | 183142.0 | 20.998362 | 21.452980 | 1.0 | 8.00 | 12.0 | 15.00 | 97.0 |

| gk_positioning | 183142.0 | 16.132154 | 16.099175 | 1.0 | 8.00 | 11.0 | 15.00 | 96.0 |

| gk_reflexes | 183142.0 | 16.441439 | 17.198155 | 1.0 | 8.00 | 11.0 | 15.00 | 96.0 |

Data Cleaning: Handling Missing Data

Real data is never clean. We need to make sure we clean the data by converting or getting rid of null or missing values.

The next code cell will show you if any of the 183978 rows have null value in one of the 42 columns.

#is any row NULL ?

df.isnull().any().any(), df.shape

(True, (183978, 42))

Now let’s try to find how many data points in each column are null.

df.isnull().sum(axis=0)

id 0

player_fifa_api_id 0

player_api_id 0

date 0

overall_rating 836

potential 836

preferred_foot 836

attacking_work_rate 3230

defensive_work_rate 836

crossing 836

finishing 836

heading_accuracy 836

short_passing 836

volleys 2713

dribbling 836

curve 2713

free_kick_accuracy 836

long_passing 836

ball_control 836

acceleration 836

sprint_speed 836

agility 2713

reactions 836

balance 2713

shot_power 836

jumping 2713

stamina 836

strength 836

long_shots 836

aggression 836

interceptions 836

positioning 836

vision 2713

penalties 836

marking 836

standing_tackle 836

sliding_tackle 2713

gk_diving 836

gk_handling 836

gk_kicking 836

gk_positioning 836

gk_reflexes 836

dtype: int64

Fixing Null Values by Deleting Them

In our next two lines, we will drop the null values by going through each row.

# Fix it

# Take initial # of rows

rows = df.shape[0]

# Drop the NULL rows

df = df.dropna()

Now if we check the null values and number of rows, we will see that there are no null values and number of rows decreased accordingly.

#Check if all NULLS are gone ?

print(rows)

df.isnull().any().any(), df.shape

183978

(False, (180354, 42))

To find exactly how many lines we removed, we need to subtract the current number of rows in our data frame from the original number of rows.

#How many rows with NULL values?

rows - df.shape[0]

3624

Our data table has many lines as you have seen. We can only look at few lines at once. Instead of looking at same top 10 lines every time, we shuffle - so we get to see different random sample on top. This way, we make sure the data is not in any particular order when we try sampling from it (like taking top or bottom few rows) by randomly shuffling the rows.

#Shuffle the rows of df so we get a distributed sample when we display top few rows

df = df.reindex(np.random.permutation(df.index))

Predicting: ‘overall_rating’ of a player, now that our data cleaning step is reasonably complete and we can trust and understand the data more, we will start diving into the dataset further.

Let’s take a look at top few rows.

We will use the head function for data frames for this task. This gives us every column in every row.

df.head(5)

| id | player_fifa_api_id | player_api_id | date | overall_rating | potential | preferred_foot | attacking_work_rate | defensive_work_rate | crossing | ... | vision | penalties | marking | standing_tackle | sliding_tackle | gk_diving | gk_handling | gk_kicking | gk_positioning | gk_reflexes | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 164033 | 164034 | 229000 | 361793 | 2015-10-09 00:00:00 | 65.0 | 71.0 | left | medium | medium | 27.0 | ... | 58.0 | 63.0 | 28.0 | 27.0 | 26.0 | 14.0 | 10.0 | 14.0 | 13.0 | 12.0 |

| 175609 | 175610 | 155264 | 28284 | 2014-01-10 00:00:00 | 69.0 | 74.0 | right | medium | medium | 55.0 | ... | 59.0 | 40.0 | 69.0 | 70.0 | 67.0 | 12.0 | 15.0 | 10.0 | 14.0 | 14.0 |

| 76665 | 76666 | 167833 | 32711 | 2014-09-18 00:00:00 | 63.0 | 63.0 | right | medium | high | 62.0 | ... | 58.0 | 75.0 | 64.0 | 65.0 | 65.0 | 6.0 | 5.0 | 15.0 | 7.0 | 9.0 |

| 6407 | 6408 | 194716 | 73047 | 2015-04-10 00:00:00 | 64.0 | 66.0 | left | medium | medium | 63.0 | ... | 28.0 | 32.0 | 67.0 | 64.0 | 64.0 | 10.0 | 10.0 | 12.0 | 8.0 | 12.0 |

| 105794 | 105795 | 199110 | 196484 | 2014-02-07 00:00:00 | 76.0 | 84.0 | right | high | low | 62.0 | ... | 69.0 | 78.0 | 25.0 | 24.0 | 21.0 | 8.0 | 10.0 | 10.0 | 14.0 | 6.0 |

5 rows × 42 columns

Most of the time, we are only interested in plotting some columns. In that case, we can use the pandas column selection option as follows. Please ignore the first column in the output of the one line code below. It is the unique identifier that acts as an index for the data.

Note: From this point on, we will start referring to the columns as “features” in our description.

df[:10][['penalties', 'overall_rating']]

| penalties | overall_rating | |

|---|---|---|

| 164033 | 63.0 | 65.0 |

| 175609 | 40.0 | 69.0 |

| 76665 | 75.0 | 63.0 |

| 6407 | 32.0 | 64.0 |

| 105794 | 78.0 | 76.0 |

| 85271 | 76.0 | 78.0 |

| 29207 | 61.0 | 74.0 |

| 133409 | 58.0 | 78.0 |

| 61074 | 61.0 | 70.0 |

| 120585 | 80.0 | 76.0 |

Feature Correlation Analysis

Next, we will check if ‘penalties’ is correlated to ‘overall_rating’. We are using a similar selection operation, bu this time for all the rows and within the correlation function.

Are these correlated (using Pearson’s correlation coefficient) ?

df['overall_rating'].corr(df['penalties'])

We see that Pearson’s Correlation Coefficient for these two columns is 0.39.

Pearson goes from -1 to +1. A value of 0 would have told there is no correlation, so we shouldn’t bother looking at that attribute. A value of 0.39 shows some correlation, although it could be stronger.

At least, we have these attributes which are slightly correlated. This gives us hope that we might be able to build a meaningful predictor using these ‘weakly’ correlated features.

Next, we will create a list of features that we would like to iterate the same operation on.

Create a list of potential Features that you want to measure correlation with

potentialFeatures = ['acceleration', 'curve', 'free_kick_accuracy', 'ball_control', 'shot_power', 'stamina']

The for loop below prints out the correlation coefficient of “overall_rating” of a player with each feature we added to the list as potential.

# check how the features are correlated with the overall ratings

for f in potentialFeatures:

related = df['overall_rating'].corr(df[f])

print("%s: %f" % (f,related))

acceleration: 0.243998

curve: 0.357566

free_kick_accuracy: 0.349800

ball_control: 0.443991

shot_power: 0.428053

stamina: 0.325606

Which features have the highest correlation with overall_rating?

Looking at the values printed by the previous cell, we notice that the to two are “ball_control” (0.44) and “shot_power” (0.43). So these two features seem to have higher correlation with “overall_rating”.

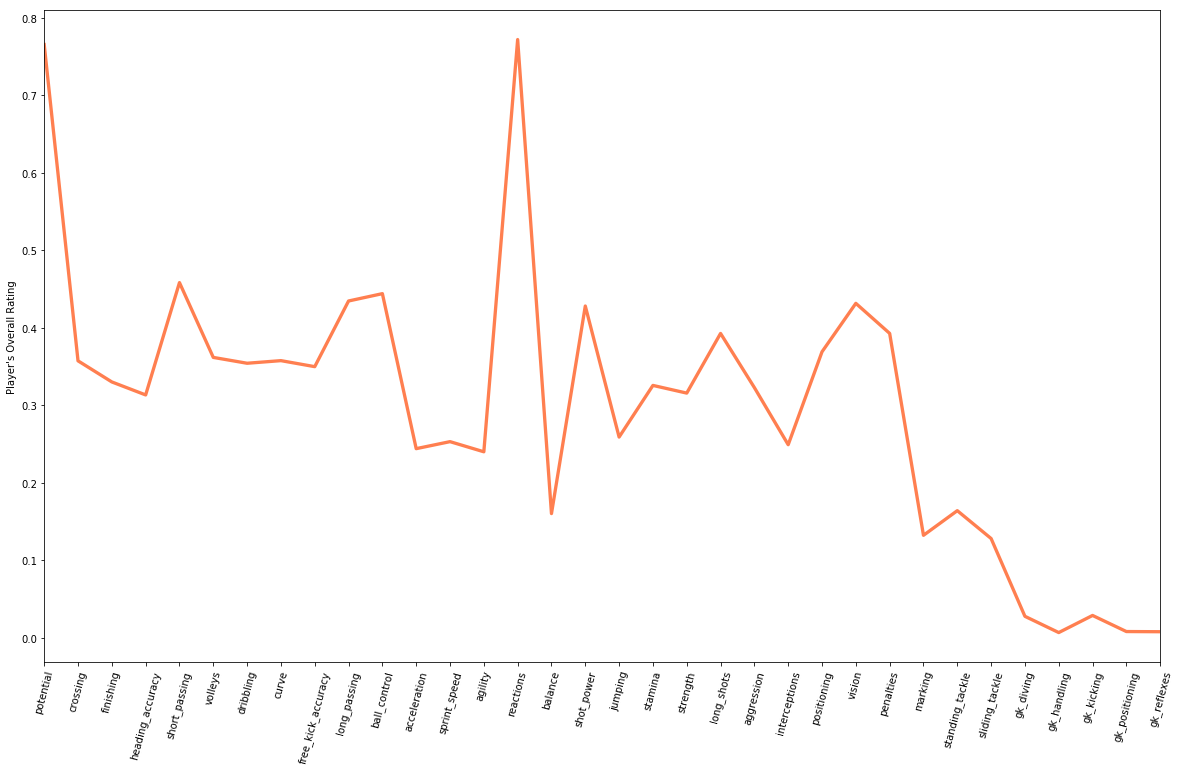

Data Visualization:

Next we will start plotting the correlation coefficients of each feature with “overall_rating”. We start by selecting the columns and creating a list with correlation coefficients, called “correlations”.

cols = ['potential', 'crossing', 'finishing', 'heading_accuracy',

'short_passing', 'volleys', 'dribbling', 'curve', 'free_kick_accuracy',

'long_passing', 'ball_control', 'acceleration', 'sprint_speed',

'agility', 'reactions', 'balance', 'shot_power', 'jumping', 'stamina',

'strength', 'long_shots', 'aggression', 'interceptions', 'positioning',

'vision', 'penalties', 'marking', 'standing_tackle', 'sliding_tackle',

'gk_diving', 'gk_handling', 'gk_kicking', 'gk_positioning',

'gk_reflexes']

# create a list containing Pearson's correlation between 'overall_rating' with each column in cols

correlations = [ df['overall_rating'].corr(df[f]) for f in cols ]

len(cols), len(correlations)

(34, 34)

We make sure that the number of selected features and the correlations calculated are the same, e.g., both 34 in this case. Next couple of cells show some lines of code that use pandas plaotting functions to create a 2D graph of these correlation vealues and column names.

# create a function for plotting a dataframe with string columns and numeric values

def plot_dataframe(df, y_label):

color='coral'

fig = plt.gcf()

fig.set_size_inches(20, 12)

plt.ylabel(y_label)

ax = df2.correlation.plot(linewidth=3.3, color=color)

ax.set_xticks(df2.index)

ax.set_xticklabels(df2.attributes, rotation=75); #Notice the ; (remove it and see what happens !)

plt.show()

# create a dataframe using cols and correlations

df2 = pd.DataFrame({'attributes': cols, 'correlation': correlations})

# let's plot above dataframe using the function we created

plot_dataframe(df2, 'Player\'s Overall Rating')

Analysis of Findings

Now it is time for you to analyze what we plotted. Suppose you have to predict a player’s overall rating. Which 5 player attributes would you ask for? Hint: Which are the five features with highest correlation coefficients?

- Clustering Players into Similar Groups

Until now, we used basic statistics and correlation coefficients to start forming an opinion, but can we do better? What if we took some features and start looking at each player using those features? Can we group similar players based on these features? Let’s see how we can do this.

Note: Generally, someone with domain knowledge needs to define which features. We could have also selected some of the features with highest correlation with overall_rating. However, it does not guarantee best outcome always as we are not sure if the top five features are independent. For example, if 4 of the 5 features depend on the remaining 1 feature, taking all 5 does not give new information.

- Select Features on Which to Group Players

# Define the features you want to use for grouping players

select5features = ['gk_kicking', 'potential', 'marking', 'interceptions', 'standing_tackle']

select5features

['gk_kicking', 'potential', 'marking', 'interceptions', 'standing_tackle']

# Generate a new dataframe by selecting the features you just defined

df_select = df[select5features].copy(deep=True)

df_select.head()

| gk_kicking | potential | marking | interceptions | standing_tackle | |

|---|---|---|---|---|---|

| 164033 | 14.0 | 71.0 | 28.0 | 15.0 | 27.0 |

| 175609 | 10.0 | 74.0 | 69.0 | 74.0 | 70.0 |

| 76665 | 15.0 | 63.0 | 64.0 | 62.0 | 65.0 |

| 6407 | 12.0 | 66.0 | 67.0 | 51.0 | 64.0 |

| 105794 | 10.0 | 84.0 | 25.0 | 25.0 | 24.0 |

Perform KMeans Clustering

Now we will use a machine learning method called KMeans to cluster the values (i.e., player features on gk_kicking, potential, marking, interceptions, and standing_tackle). We will ask for four clusters. We will talk about KMeans clustering and other machine learning tools in Python in Week 7 so we won’t discuss these methods here.

# Perform scaling on the dataframe containing the features

data = scale(df_select)

# Define number of clusters

noOfClusters = 4

# Train a model

model = KMeans(init='k-means++', n_clusters=noOfClusters, n_init=20).fit(data)

print(90*'_')

print("\nCount of players in each cluster")

print(90*'_')

pd.value_counts(model.labels_, sort=False)

__________________________________________________________________________________________

Count of players in each cluster

__________________________________________________________________________________________

0 50444

1 23788

2 50218

3 55904

dtype: int64

# Create a composite dataframe for plotting

# ... Use custom function declared in customplot.py (which we imported at the beginning of this notebook)

P = pd_centers(featuresUsed=select5features, centers=model.cluster_centers_)

P

| gk_kicking | potential | marking | interceptions | standing_tackle | prediction | |

|---|---|---|---|---|---|---|

| 0 | -0.042786 | 0.705142 | 1.028476 | 0.983227 | 1.030906 | 0 |

| 1 | 1.920554 | 0.038680 | -1.110334 | -0.651640 | -1.199541 | 1 |

| 2 | -0.335917 | -0.842965 | 0.548630 | 0.407480 | 0.551229 | 2 |

| 3 | -0.477137 | 0.105603 | -0.947568 | -0.975185 | -0.914116 | 3 |

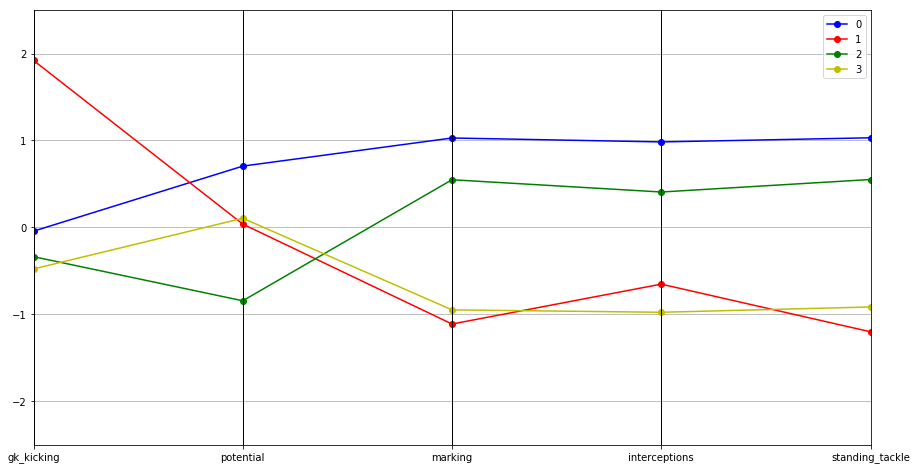

Visualization of Clusters

We now have 4 clusters based on the features we selected, we can treat them as profiles for similar groups of players. We can visualize these profiles by plotting the centers for each cluster, i.e., the average values for each featuere within the cluster. We will use matplotlib for this visualization. We will learn more about matplotlib later.

# For plotting the graph inside the notebook itself, we use the following command

%matplotlib inline

parallel_plot(P)

Analysis of Findings

- Can you identify the groups for each of the below?

Two groups are very similar except in gk_kicking - these players can coach each other on gk_kicking, where they differ Two groups are somewhat similar to each other except in potential.